Overview

UPDATE: Check out the updated post that shows how to use the Azure Data Lake Storage connector.

Azure Data Lake Store is an enterprise-wide, hyper-scale repository for big data analytic workloads. Azure Data Lake lets you capture data of any size, type, and ingestion speed in a single place for operational and exploratory analytics.

This guide shows how easy it is to connect to Azure Data Lake Store from MuleSoft by using the WebHDFS REST APIs it exposes. Azure Data Lake Store is essentially a version of HDFS, but while MuleSoft has a connector for HDFS, Azure requires authentication through Azure Active Directory using OAuth. The guide below shows you how to set up an Azure Data Lake Store, set up an Azure Active Directory app, and connect to Azure using a pre-configured project in MuleSoft Anypoint Studio.

Requirements

- Microsoft Azure account

- MuleSoft Anypoint Studio

Create an Azure Data Lake Store

If you don’t already have an Azure Data Lake Store, follow these steps to set one up. You need an Azure account: you can sign up for a 30-day trial or use a Pay-As-You-Go subscription, which is what I used.

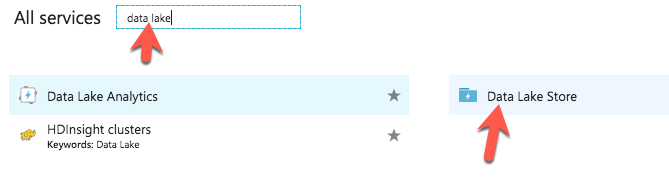

- Log in to the Azure portal and click on All services in the left-hand navigation bar.

- In the search field, type data lake and click on Data Lake Store.

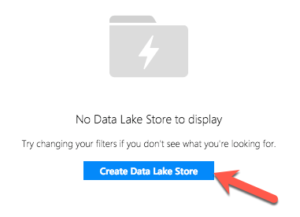

- In Data Lake Store, click on Create Data Lake Store in the center or click on Add in the top left.

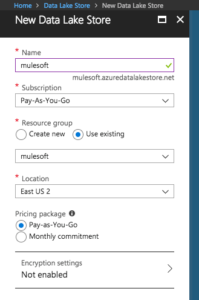

- In the New Data Lake Store window, enter the following information:

- Name: mulesoft

- Subscription: This depends on your account. If you recently signed up, you should have a 30-day subscription. I selected Pay-As-You-Go.

- Resource group: Either create a new resource group or use an existing one (e.g. mulesoft).

- Location: Leave the default, East US 2.

- Encryption settings: I set this to Do not enable encryption.

- Click on Create

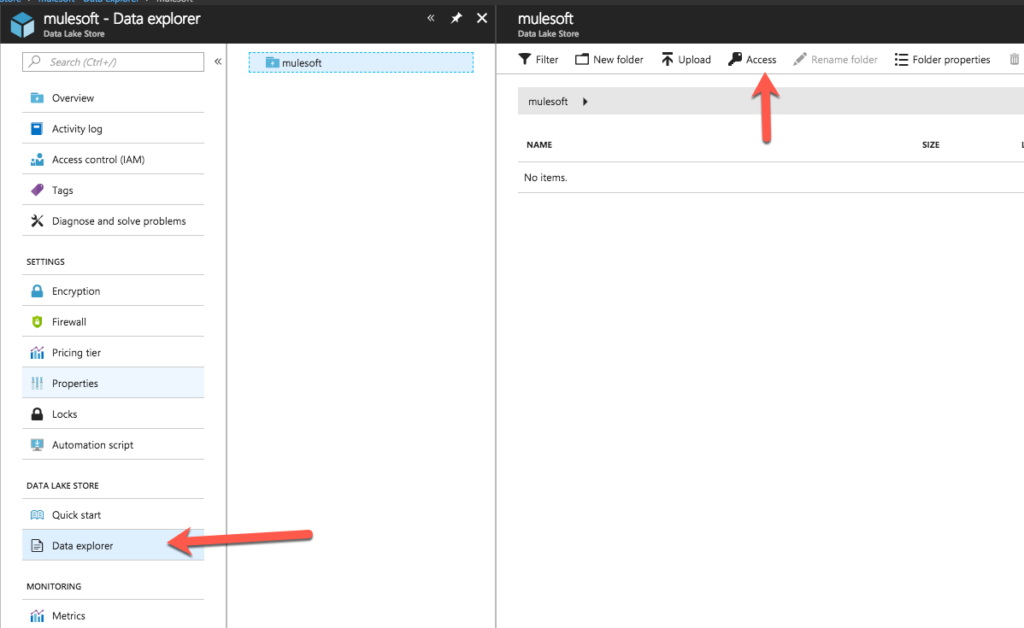

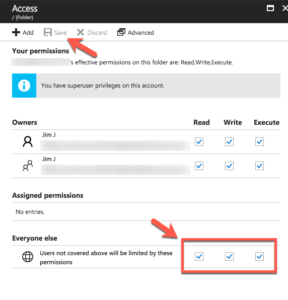

- Once the Data Lake Store is created, click on Data explorer in the left-hand navigation menu. For this demo we want to grant access to all users, so click on the Access button.

- In the Access screen, check the Read, Write, and Execute checkboxes under Everyone else and then click on Save.
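Data Lake Store uses POSIX-style permissions, so the three checkboxes under Everyone else correspond to the "other" permission bits (read = 4, write = 2, execute = 1). A minimal sketch of that mapping; the helper names are hypothetical and not part of any Azure SDK, and the owner/group values in the example are made up:

```python
def permission_digit(read: bool, write: bool, execute: bool) -> int:
    """Combine r/w/x flags into one POSIX octal digit (r=4, w=2, x=1)."""
    return (4 if read else 0) | (2 if write else 0) | (1 if execute else 0)


def permission_string(owner, group, other) -> str:
    """Each argument is a (read, write, execute) tuple; returns a string such as '757'."""
    return "".join(str(permission_digit(*bits)) for bits in (owner, group, other))


# Checking Read, Write and Execute under "Everyone else" sets the "other" digit to 7.
print(permission_digit(True, True, True))  # 7

# Hypothetical full setting: owner rwx, group r-x, everyone else rwx.
print(permission_string((True, True, True), (True, False, True), (True, True, True)))  # 757
```

Granting rwx to Everyone else is convenient for a demo, but it opens the folder to any authenticated caller, so tighten it outside a test account.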

Create an Azure Active Directory “Web” Application

- In the left-hand navigation menu, click on Azure Active Directory. If the menu item isn’t there, click on All services and search for it.

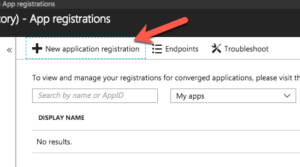

- In the navigation menu for Azure Active Directory, click on App registrations

- Click on New application registration

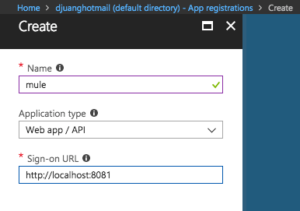

- In the Create window, enter the following data:

- Name: mule

- Application type: Keep the default Web app / API

- Sign-on URL: Just enter http://localhost:8081. This can be changed later and doesn’t affect anything in this demo.

- Click on Create

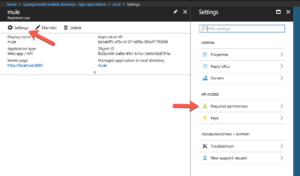

Once the app has been created, copy down the Application ID (e.g. bdcabff5-af3c-4127-b69b-38bcf1792bfd).

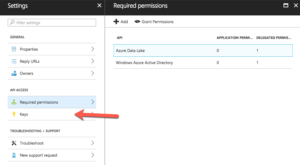

- Next, click on Settings and then click on Required permissions

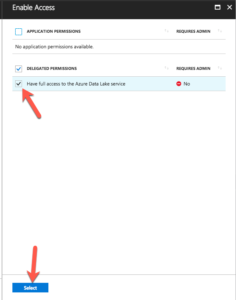

- In the Required permissions screen, click on Add and then click on Select an API. Select Azure Data Lake from the list of available APIs and click on Select.

- In the Enable Access screen, check the Have full access to the Azure Data Lake service checkbox and then click on Select.

- Next, we need to generate a key. Click on Keys in the left-hand navigation bar of the app settings.

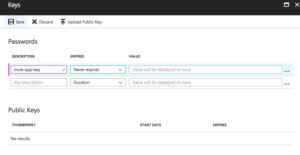

- In the Keys window, enter the following:

- Description: mule-app-key

- Expires: Set this to Never expires

- Click on Save. A value appears for the key. Copy it down now, because the portal only shows it once (e.g. +zAbZQgXomvqsfHgCH32Yv+VCvkT3ZcxRyw5CWaw4dw=).

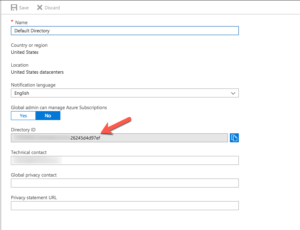
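Keys like the one above can contain characters such as +, / and =. When the key is sent as a form field in the OAuth token request, it must be URL-encoded; otherwise + is decoded as a space and authentication fails. A minimal Python illustration with the standard library, using a made-up key:

```python
from urllib.parse import parse_qs, urlencode

# Hypothetical key value; real keys are random but can contain "+", "/" and "=".
client_secret = "abc+def/ghi="

# Correct: urlencode() percent-encodes the reserved characters.
encoded = urlencode({"client_secret": client_secret})
print(encoded)  # client_secret=abc%2Bdef%2Fghi%3D
assert parse_qs(encoded)["client_secret"] == [client_secret]

# Wrong: pasting the raw key into a form body turns "+" into a space on decode.
naive = "client_secret=" + client_secret
print(parse_qs(naive)["client_secret"])  # ['abc def/ghi=']
```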

- Next, let’s get your tenant ID. In Azure Active Directory, click on Properties in the left-hand navigation bar.

- Copy down the Directory ID value. That’s your tenant ID that you’ll need to make the OAuth call. e.g. 57744783-79ff-49ab-b27e-26245d4d97ef
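With the tenant ID, Application ID and key in hand, the OAuth call the Mule project makes is an OAuth 2.0 client-credentials token request to Azure AD. Below is a minimal Python sketch of building that request. It assumes the Azure AD v1 endpoint at login.microsoftonline.com and the Data Lake resource URI https://datalake.azure.net/, neither of which is spelled out in this guide. The function only constructs the request and sends nothing:

```python
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: Azure AD v1 token endpoint, matching the "<tenant ID>/oauth2/token"
# shape of oauth.path used by the Mule project.
AUTHORITY = "https://login.microsoftonline.com"
# Assumption: resource (audience) URI for Azure Data Lake Store.
ADLS_RESOURCE = "https://datalake.azure.net/"


def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> Request:
    """Build, but do not send, an OAuth 2.0 client-credentials token request."""
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,  # urlencode escapes "+", "/" and "="
        "resource": ADLS_RESOURCE,
    }).encode("ascii")
    return Request(
        f"{AUTHORITY}/{tenant_id}/oauth2/token",
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Example IDs from this guide; the key is a placeholder.
req = build_token_request(
    "57744783-79ff-49ab-b27e-26245d4d97ef",
    "bdcabff5-af3c-4127-b69b-38bcf1792bfd",
    "<your key>",
)
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen()` sends it. A successful response is JSON whose `access_token` field holds the bearer token that the flows attach to their Data Lake Store requests.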

Download and Run the Mule Project

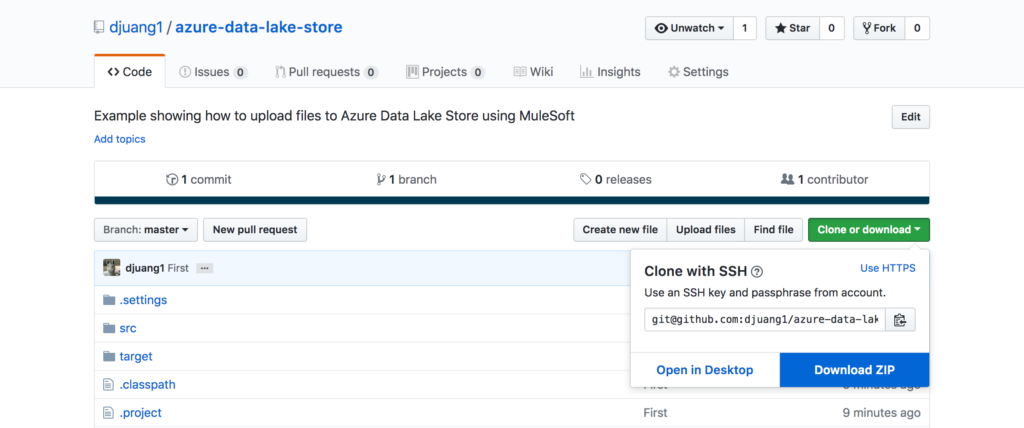

- Download the project from GitHub: https://github.com/djuang1/azure-data-lake-store

- Import the project into Anypoint Studio

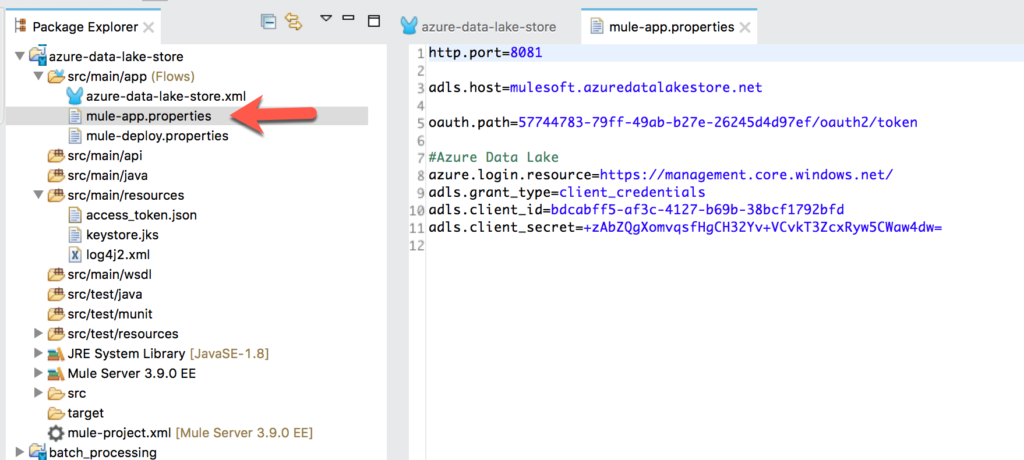

- Open mule-app.properties and modify the following properties:

- adls.host: <name of your Data Lake Store>.azuredatalakestore.net

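- oauth.path: <tenant ID>/oauth2/token, where the tenant ID is the Directory ID you copied from the Azure Active Directory Properties page

- adls.client_id: The Application ID you copied after registering the mule app

- adls.client_secret: The key value you copied from the Keys window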

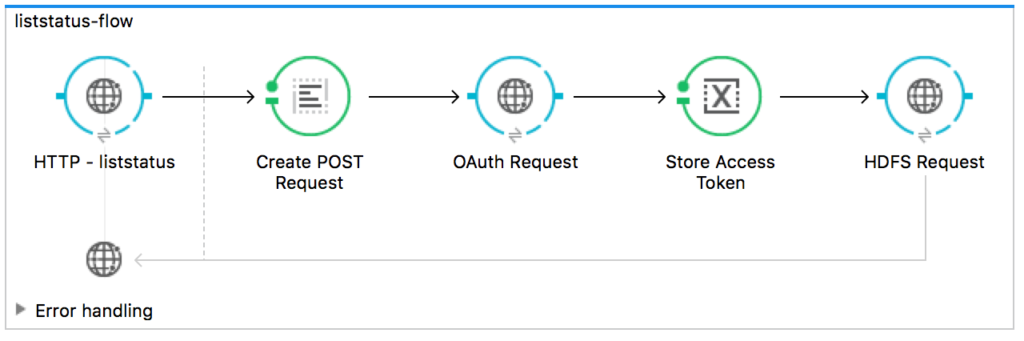
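To sanity-check the edited file, here is a small Python sketch that parses simple `key=value` lines and shows which URLs the values combine into. The sample contents reuse this guide's example values with a placeholder key. Two things are assumptions: that the file uses plain `key=value` lines, and that oauth.path is resolved against login.microsoftonline.com. The helper names are hypothetical.

```python
# Hypothetical mule-app.properties contents, using the example values from this guide.
SAMPLE = """\
adls.host=mulesoft.azuredatalakestore.net
oauth.path=57744783-79ff-49ab-b27e-26245d4d97ef/oauth2/token
adls.client_id=bdcabff5-af3c-4127-b69b-38bcf1792bfd
adls.client_secret=<your key>
"""

REQUIRED = ("adls.host", "oauth.path", "adls.client_id", "adls.client_secret")


def load_properties(text: str) -> dict:
    """Parse simple 'key=value' / 'key: value' lines; no escapes or continuations."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue  # skip blank lines and comments
        # The key ends at the first '=' or ':'; everything after it is the value.
        cuts = [i for i in (line.find("="), line.find(":")) if i != -1]
        if not cuts:
            continue
        cut = min(cuts)
        props[line[:cut].strip()] = line[cut + 1:].strip()
    return props


def derived_urls(props: dict) -> dict:
    """Fail fast on missing values, then build the two URLs the flows depend on."""
    missing = [k for k in REQUIRED if not props.get(k)]
    if missing:
        raise ValueError(f"missing properties: {', '.join(missing)}")
    return {
        # Assumption: oauth.path is resolved against the Azure AD v1 authority.
        "token_url": f"https://login.microsoftonline.com/{props['oauth.path']}",
        "list_root_url": f"https://{props['adls.host']}/webhdfs/v1/?op=LISTSTATUS",
    }


urls = derived_urls(load_properties(SAMPLE))
print(urls["token_url"])
print(urls["list_root_url"])
```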

- The first flow lists the files and folders in the root directory of the Data Lake Store. When the flow receives a request, it builds the parameters for the OAuth token request. If the OAuth request succeeds, it returns an access token, which is then used to make the WebHDFS request that lists the folders.

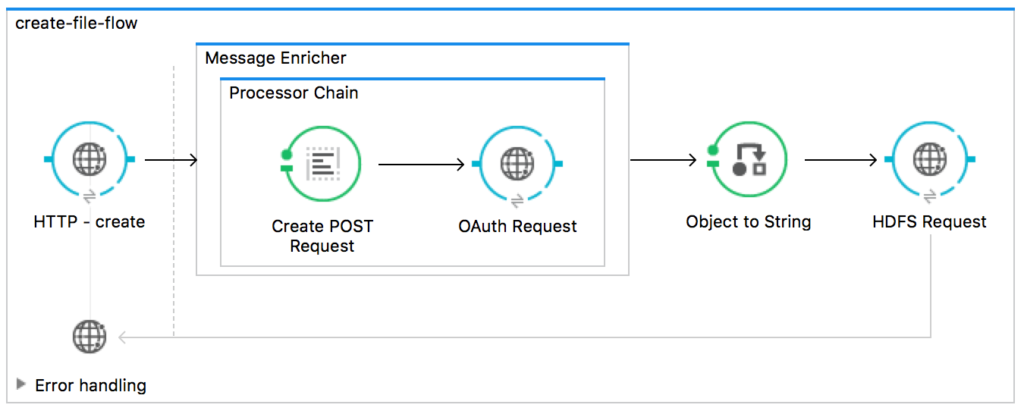
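The listing step in the first flow corresponds to a WebHDFS LISTSTATUS call carrying the access token as a bearer token. A minimal Python sketch of that request, plus parsing of the standard WebHDFS response shape. The sample response is hypothetical and trimmed, and the helper names are illustrative, not taken from the Mule project:

```python
import json
from urllib.request import Request


def build_liststatus_request(host: str, access_token: str, path: str = "/") -> Request:
    """Build (not send) a WebHDFS LISTSTATUS call for a directory in the store."""
    return Request(
        f"https://{host}/webhdfs/v1{path}?op=LISTSTATUS",
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


def entry_names(response_body: str) -> list:
    """Return (name, type) pairs from a WebHDFS LISTSTATUS JSON response."""
    statuses = json.loads(response_body)["FileStatuses"]["FileStatus"]
    return [(s["pathSuffix"], s["type"]) for s in statuses]


req = build_liststatus_request("mulesoft.azuredatalakestore.net", "<access token>")
print(req.full_url)

# Hypothetical, trimmed response body in the standard WebHDFS shape.
sample = (
    '{"FileStatuses": {"FileStatus": ['
    '{"pathSuffix": "list2.txt", "type": "FILE"}, '
    '{"pathSuffix": "data", "type": "DIRECTORY"}]}}'
)
print(entry_names(sample))  # [('list2.txt', 'FILE'), ('data', 'DIRECTORY')]
```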

- The second flow shows how to upload data to the Data Lake Store. Similar to the first flow, it makes an OAuth request and passes the access token to make the HDFS request.

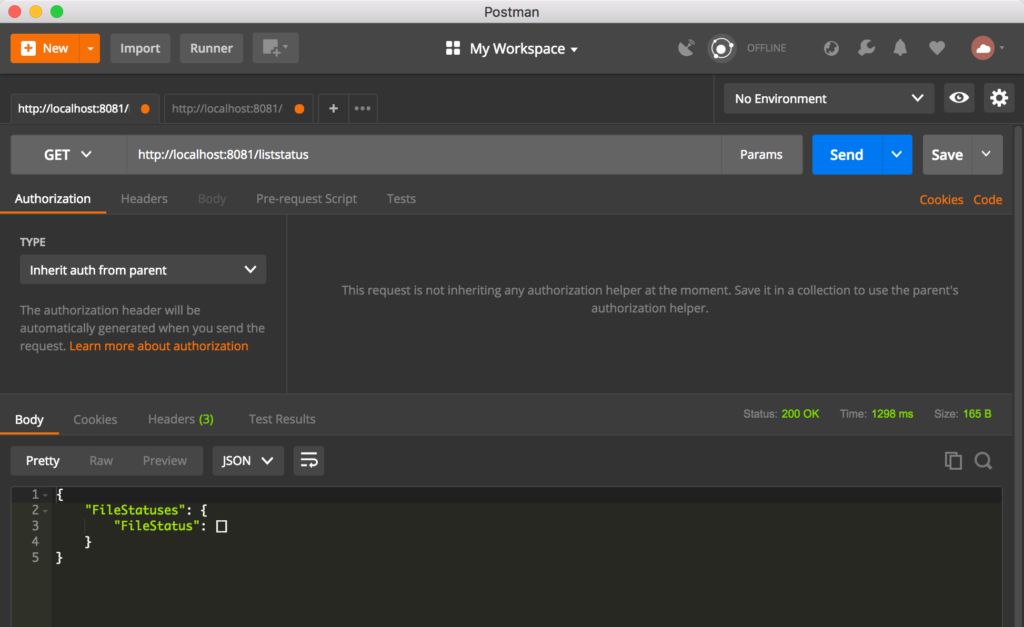
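The upload step in the second flow corresponds to a WebHDFS CREATE call. A minimal Python sketch, assuming the file body goes in a single PUT with the bearer token and an `application/octet-stream` content type. Standard WebHDFS can also use a two-step redirect, which this sketch does not handle. The helper name is hypothetical:

```python
from urllib.parse import quote
from urllib.request import Request


def build_create_request(host: str, access_token: str, file_name: str, data: bytes) -> Request:
    """Build (not send) a WebHDFS CREATE call that writes `data` to /<file_name>."""
    return Request(
        # quote() keeps the path valid if the name contains spaces or reserved characters.
        f"https://{host}/webhdfs/v1/{quote(file_name)}?op=CREATE",
        data=data,
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/octet-stream",  # assumption: raw bytes
        },
        method="PUT",
    )


req = build_create_request(
    "mulesoft.azuredatalakestore.net", "<access token>", "list2.txt", b"hello\n"
)
print(req.get_method(), req.full_url)
```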

- Run the project and open up Postman.

- Let’s test the first flow. Paste the following into the request URL: http://localhost:8081/liststatus and click Send. The screenshot below shows the results. If you add some folders and files, you’ll receive more data from the API call.

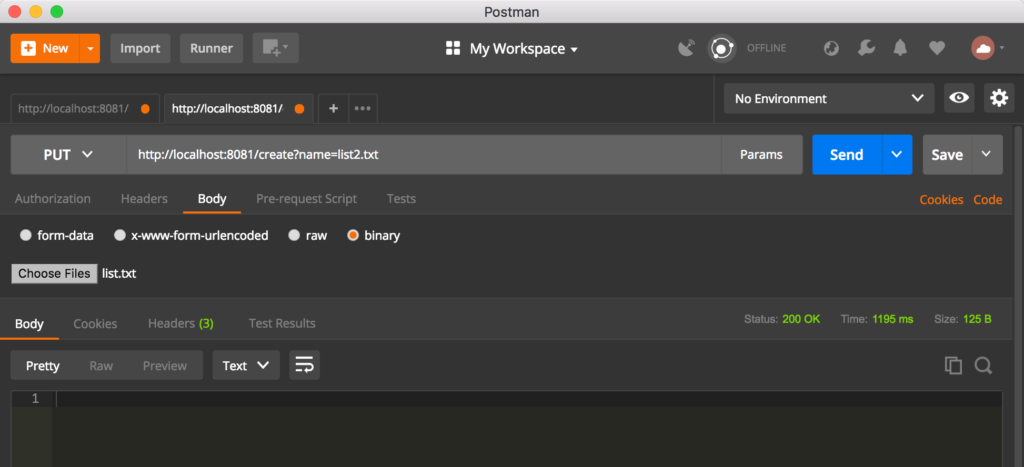
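If you prefer a script to Postman, the same test can be sketched with Python's standard library. This assumes the app is listening on http://localhost:8081 as above and that the flow returns the JSON listing; the function names are hypothetical:

```python
import json
from urllib.request import Request, urlopen

BASE_URL = "http://localhost:8081"  # the Mule app's HTTP listener used in this guide


def build_list_request(base_url: str = BASE_URL) -> Request:
    """Same call as the Postman test: GET /liststatus on the running Mule app."""
    return Request(f"{base_url}/liststatus", method="GET")


def list_root(base_url: str = BASE_URL) -> dict:
    """Send the request and decode the JSON body (requires the app to be running)."""
    with urlopen(build_list_request(base_url), timeout=30) as resp:
        return json.loads(resp.read())
```

With the project running, `print(list_root())` should print the same data Postman shows.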

- Open another tab and paste the following URL: http://localhost:8081/create?name=list2.txt

- Change the method to PUT. Under the Body section, select the binary radio button and choose the file list.txt from the project's src/main/resources folder. Click on Send.

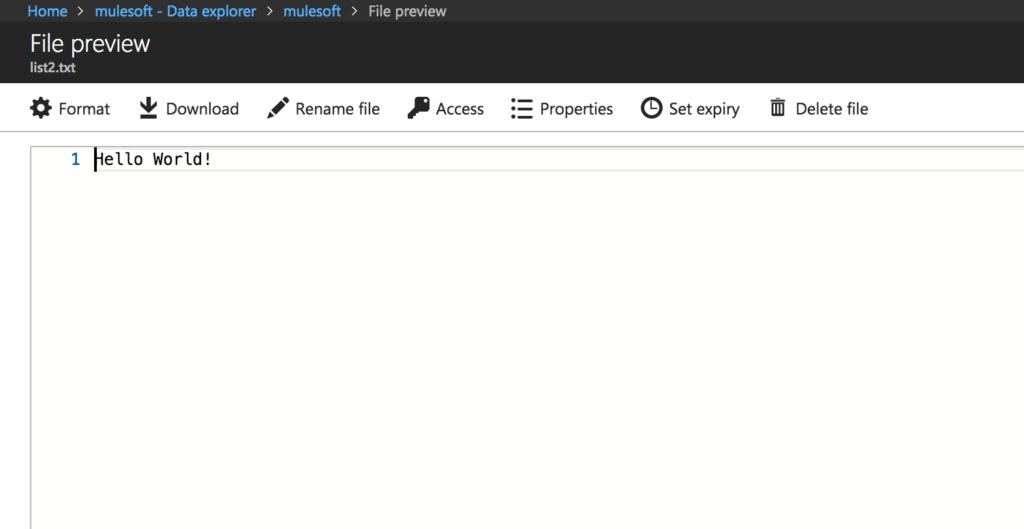

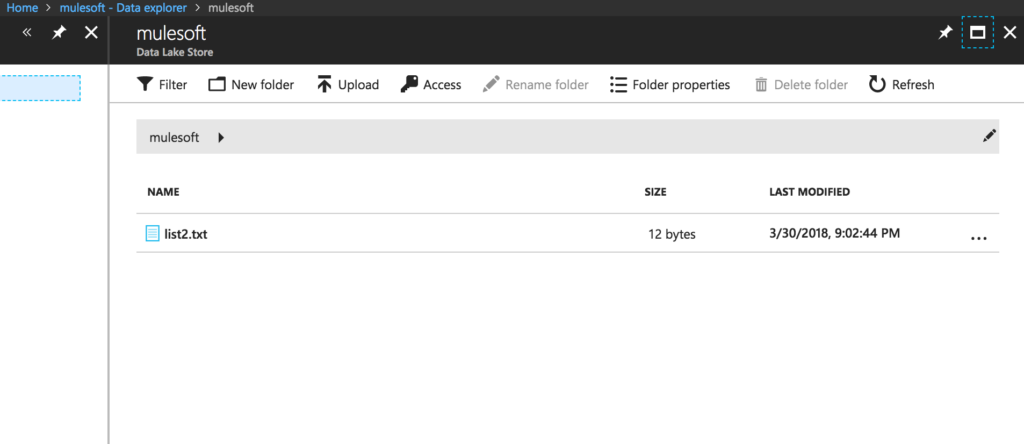
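The Postman upload can be reproduced the same way: a PUT to /create with the target name in the query string and the raw file bytes as the body. A minimal sketch, where the explicit `application/octet-stream` content type is an assumption and the helper name is hypothetical:

```python
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "http://localhost:8081"


def build_upload_request(body: bytes, target_name: str, base_url: str = BASE_URL) -> Request:
    """Same call as the Postman test: PUT /create?name=<target_name> with a raw binary body."""
    return Request(
        f"{base_url}/create?{urlencode({'name': target_name})}",
        data=body,
        headers={"Content-Type": "application/octet-stream"},  # assumption
        method="PUT",
    )


# Usage from the project root, with the Mule app running:
#   from pathlib import Path
#   from urllib.request import urlopen
#   body = Path("src/main/resources/list.txt").read_bytes()
#   urlopen(build_upload_request(body, "list2.txt"))
```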

- If the upload succeeded, switch back to the Data Lake Store and you should see the file in the root directory. Click the file to open it.

- If everything was configured correctly, the file should contain the same data as list.txt.